Portfolio


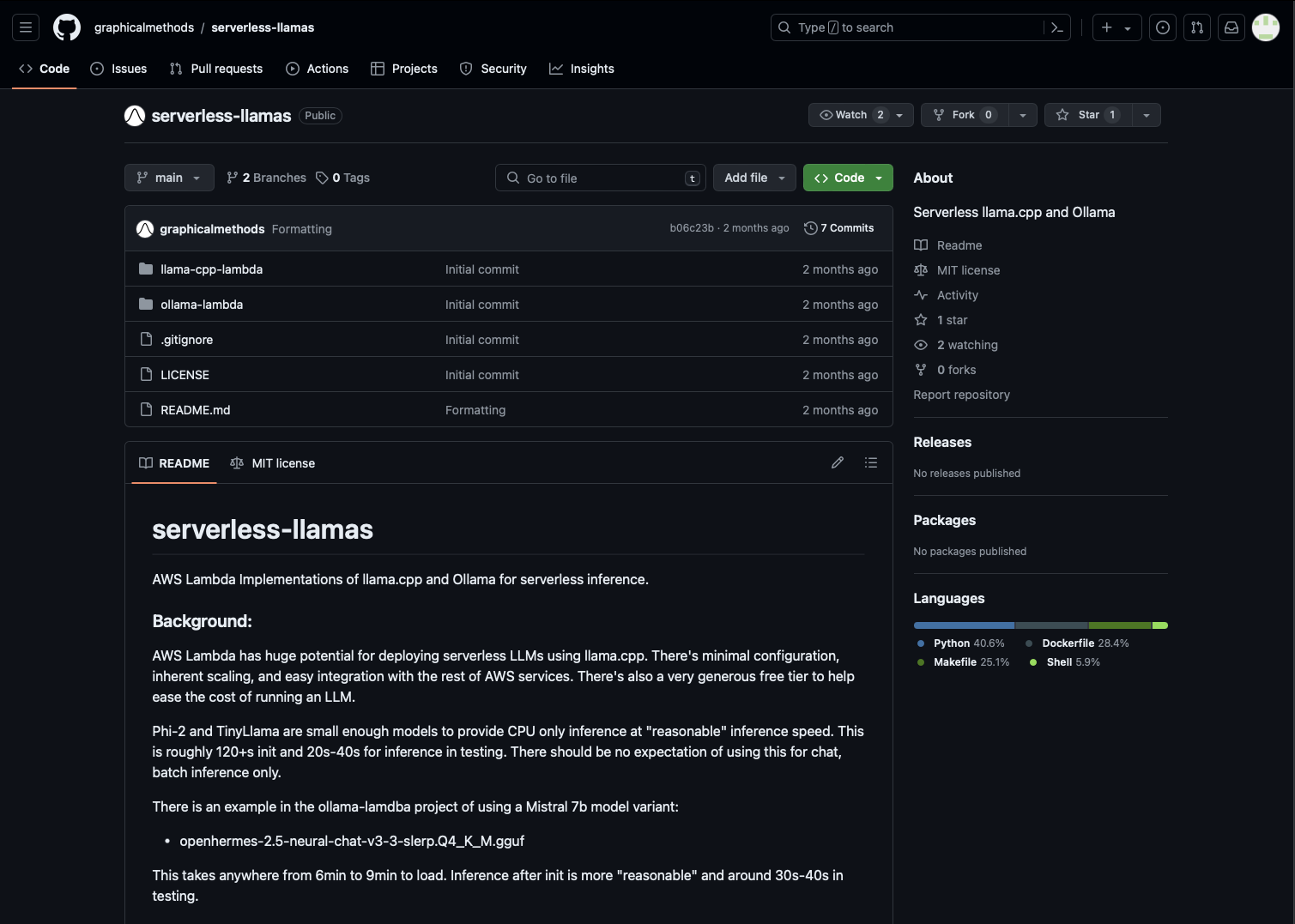

Serverless LLMs

A GitHub repository for deploying Large Language Models (LLMs) on AWS Lambda using a serverless architecture. It provides llama.cpp and Ollama implementations for serverless inference, so users can deploy LLMs with minimal configuration, built-in scaling, and easy integration with other AWS services.

AI Perspectives

An AI project for good! It uses AI agents to generate feedback on community effects and potential recommendations, while also highlighting the biases in AI-generated output. See if you believe it.